Last year was pivotal for the development of AI agents, setting the stage for significant advances in 2025. As businesses and developers explore the technology’s potential, it is increasingly important to understand the evolving landscape, applications, and frameworks of AI agents. The trajectory of AI agent technology is sharply upward, driven by heavy investment in research and development from key players like Anthropic, OpenAI, and Microsoft. These companies are introducing increasingly sophisticated models that promise to enhance automation and decision-making across industries such as healthcare, finance, and customer service.

What are AI Agents?

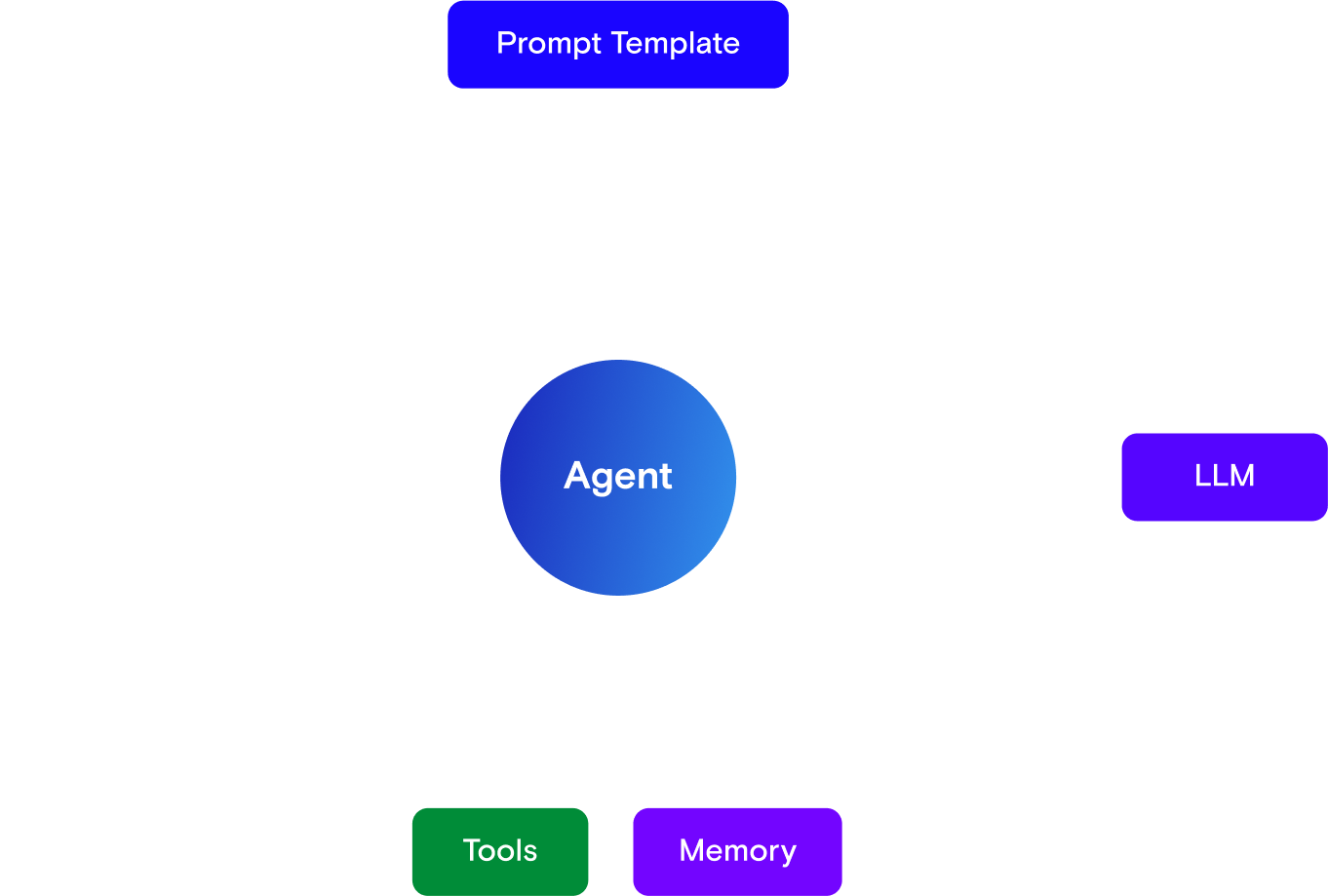

AI agents are systems powered by large language models (LLMs) that autonomously or semi-autonomously perform tasks ranging from simple data retrieval to complex problem-solving. These agents are engineered to interpret system prompts, use digital tools, and draw on persistent or in-memory storage, enabling them to operate independently or within orchestrated systems. Their versatility lets them adapt across sectors, taking over repetitive tasks so that human operators can focus on more complex duties. Because they integrate into existing IT infrastructures, they are also a practical way to scale operations efficiently.

Anthropic’s Take on AI Agents

Anthropic, a leader in AI research, offers a nuanced perspective on the definition of AI agents. They observe that the term “agent” can be interpreted in several ways, ranging from fully autonomous systems that operate independently for extended periods to more prescriptive implementations that follow predefined workflows. Anthropic embraces these variations under the umbrella of agentic systems but emphasizes a crucial architectural distinction between workflows and agents:

- Workflows are systems where LLMs and tools are orchestrated through predefined code paths.

- Agents, on the other hand, are systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks.

This differentiation is vital as it highlights the varying degrees of autonomy and complexity in AI systems. Workflows, as defined by Anthropic, offer a structured approach where tasks are executed according to set rules and sequences. This can be particularly advantageous in environments that require high reliability and predictability.

Conversely, agents represent a more flexible and dynamic approach. These systems are capable of self-direction, making them suitable for tasks that benefit from adaptation and learning over time. This makes agents ideal for scenarios where decision-making needs to consider fluctuating data or where the system must evolve its approach to problem-solving based on new information.

In the detailed exploration of agentic systems, Anthropic clarifies how both workflows and agents can be leveraged to address diverse needs. The choice between deploying a workflow or an agent depends largely on the complexity of the task and the required level of autonomy.
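The distinction between the two can be sketched in a few lines of Python. This is a minimal illustration, not Anthropic's implementation: the `TOOLS` table and the `stub_policy` function (a stand-in for an LLM choosing the next action) are hypothetical names invented for this example.

```python
from typing import Callable

# Hypothetical tools; a real system would call APIs or databases here.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda q: f"results for '{q}'",
    "summarize": lambda text: f"summary of [{text}]",
}

def run_workflow(query: str) -> str:
    """Workflow: the code fixes the order of steps in advance."""
    found = TOOLS["search"](query)
    return TOOLS["summarize"](found)

def stub_policy(query: str, history: list[str]) -> str:
    """Stand-in for an LLM deciding the next action from what it has seen."""
    if not history:
        return "search"
    if len(history) == 1:
        return "summarize"
    return "stop"

def run_agent(query: str, policy=stub_policy, max_steps: int = 5) -> str:
    """Agent: the model (here, a policy function) picks each next tool."""
    history: list[str] = []
    for _ in range(max_steps):
        action = policy(query, history)
        if action == "stop":
            break
        tool_input = history[-1] if history else query
        history.append(TOOLS[action](tool_input))
    return history[-1] if history else ""
```

Both functions produce the same result for this toy policy. The difference is where the control flow lives: in `run_workflow` it is written by the developer, while in `run_agent` it is decided step by step at run time.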

Let’s look at some of the popular AI agent frameworks in 2025.

AI Agent Frameworks

The AI agent framework landscape is diverse, featuring powerful tools such as LangGraph, Amazon Bedrock, Microsoft AutoGen, Google Genkit, Crew AI, LlamaIndex, and the OpenAI Assistant SDK. Each framework offers unique strengths tailored to specific technological needs and organizational environments.

Framework Specialties and Use Cases:

- LangGraph: Excels in managing complex agent networks with a robust routing architecture. Ideal for applications requiring detailed customization of search functions and task division.

- AutoGen: Focuses on building conversational AI applications with dynamic agent interactions. Well-suited for enterprises and developers aiming to create sophisticated conversational interfaces.

- Amazon Bedrock: Integrates deeply with AWS services, providing extensive support for deploying AI agents in the cloud. Perfect for businesses leveraging cloud scalability for large-scale AI deployments.

- Google Genkit: Offers tools for rapid deployment of AI solutions, emphasizing ease of use and accessibility. Best for companies looking for quick setup and straightforward integration of AI capabilities.

- Crew AI: Provides a modular platform that supports customizable agent architectures. Fits well with organizations that require flexible and adaptable AI solutions to meet evolving business needs.

- LlamaIndex: Specializes in enhancing data retrieval and management within AI interactions. Ideal for applications that demand efficient handling and indexing of large datasets.

- OpenAI Assistant SDK: Utilizes OpenAI’s cutting-edge language models to facilitate advanced conversational agent development. Excellent for creating agents that require deep contextual understanding and responsiveness.

Each of these frameworks brings something unique to the table, enabling businesses to tailor their AI strategies to best fit their specific requirements.

Let’s see how AI agents are being applied to different industries.

Use Cases for AI Agents in 2025

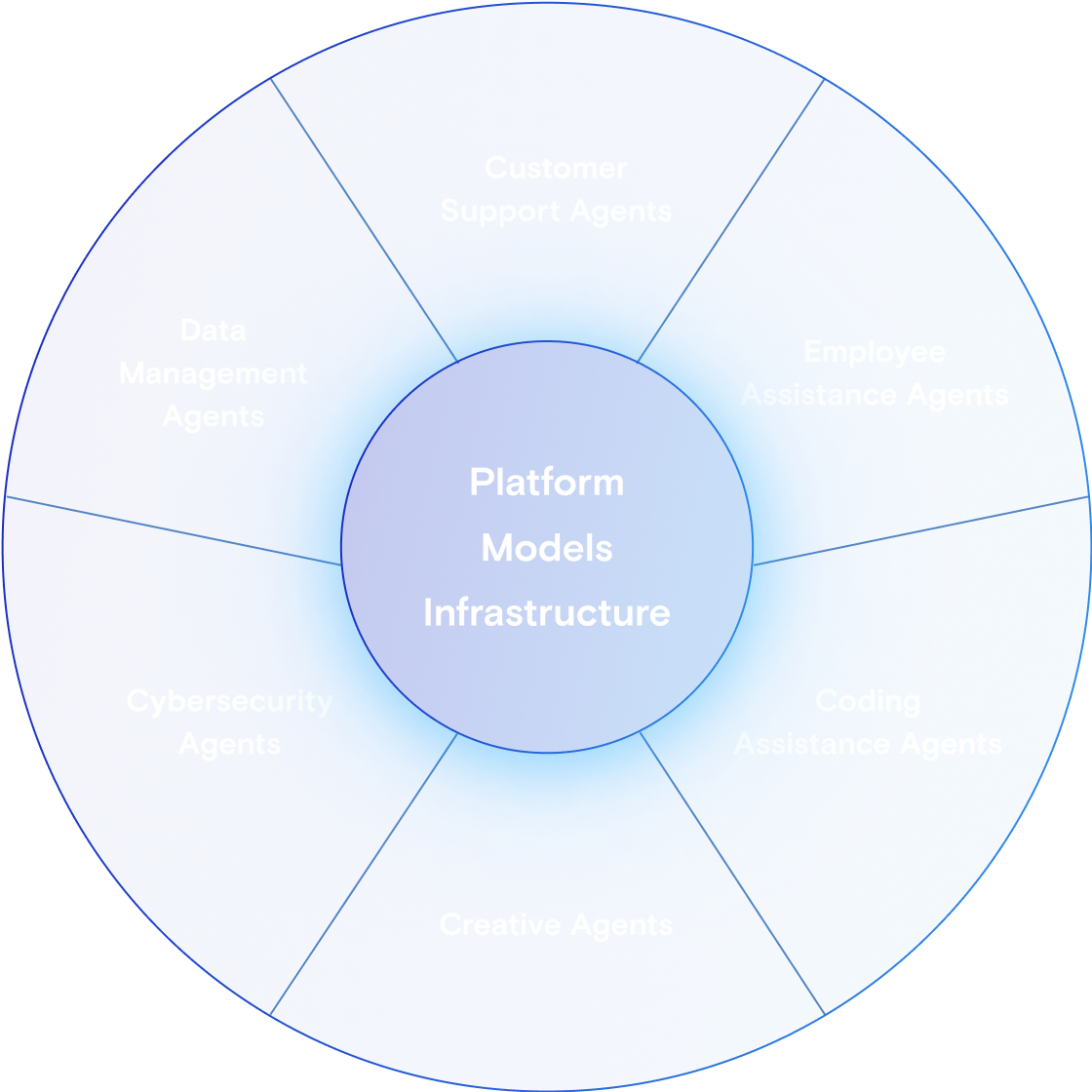

AI agents are transforming multiple sectors by automating routine tasks, enhancing decision-making, and surfacing new insights from data analysis. In customer support, they can personalize interactions based on user history; in software development, they can automate testing and bug fixes, significantly speeding up the development process. The main categories of agents are:

Customer Support Agents

AI agents in sectors like banking, insurance, and healthcare personalize customer interactions by analyzing historical data to provide targeted assistance. This improves resolution times and customer satisfaction.

Employee Assistance Agents

In the workplace, AI agents automate routine tasks such as coding, system analysis, and candidate screening, freeing employees to focus on more complex problems. In healthcare, these agents assist with data and imagery analysis, enhancing diagnostic and treatment processes.

Coding Assistance Agents

Embedded in tools like GitHub Copilot and Visual Studio, these agents assist developers by suggesting code, completing lines, and offering documentation, speeding up development and reducing errors.

Data Management Agents

These agents automate the collection, cleaning, and analysis of data, enabling organizations to quickly process large volumes and make informed decisions without manual intervention.

Cybersecurity Agents

Cybersecurity agents monitor networks, detect threats, and respond to anomalies in real time, which is crucial for protecting sensitive data and maintaining privacy in an increasingly digital world.

Creative Agents

In creative fields, AI agents assist in generating visual content, editing media, and even crafting music, significantly reducing production time and enabling creativity at scale.

Understanding Agent Patterns and Workflows in AI Systems with Anthropic

In the rapidly evolving field of artificial intelligence, understanding how AI agents operate through specific patterns and workflows is crucial for leveraging their full potential. Below, we delve into the common agent patterns and workflows that enhance the capabilities and efficiency of AI systems.

Agent Patterns Explained

Augmented LLMs (Large Language Models): An augmented LLM extends a base model with retrieval, tools, and memory. Anthropic describes this setup as the basic building block of agentic systems, and it closely resembles what many consider a classic agent. Its most familiar form is the classic RAG (Retrieval-Augmented Generation) workflow, in which the LLM queries a database (usually a vector database) to gather the data it needs. The LLM may then use various tools to process this data, and it consults its memory of past interactions before producing a response. The resulting responses are more precise and more contextually aware, which makes them more relevant and useful.
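As a rough sketch of how retrieval, a tool, and memory come together, consider the following. It makes several simplifying assumptions: word overlap stands in for vector similarity, `DOCS` stands in for a vector database, and `stub_llm` is a hypothetical placeholder for a real model call.

```python
import re

# Tiny in-memory corpus standing in for a vector database.
DOCS = [
    "Workflows orchestrate LLMs and tools through predefined code paths.",
    "Agents dynamically direct their own processes and tool usage.",
]

def tokens(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Toy retrieval: rank documents by word overlap with the query."""
    q = tokens(query)
    return sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)[:k]

def word_count(text: str) -> int:
    """Example tool applied to the retrieved data."""
    return len(text.split())

def stub_llm(prompt: str) -> str:
    """Hypothetical model call; returns a canned reply instead of generating text."""
    return "(stub answer) " + prompt.splitlines()[0]

class AugmentedLLM:
    def __init__(self, docs: list[str]):
        self.docs = docs
        self.memory: list[str] = []  # earlier questions in this session

    def build_prompt(self, question: str) -> str:
        context = retrieve(question, self.docs)[0]   # retrieval
        size = word_count(context)                   # tool call
        history = "; ".join(self.memory) or "none"   # memory
        return (f"Context ({size} words): {context}\n"
                f"Earlier questions: {history}\n"
                f"Question: {question}")

    def ask(self, question: str) -> str:
        answer = stub_llm(self.build_prompt(question))
        self.memory.append(question)
        return answer
```

Calling `ask` twice shows the memory at work: the second prompt lists the first question under "Earlier questions", so the model can take the earlier exchange into account.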

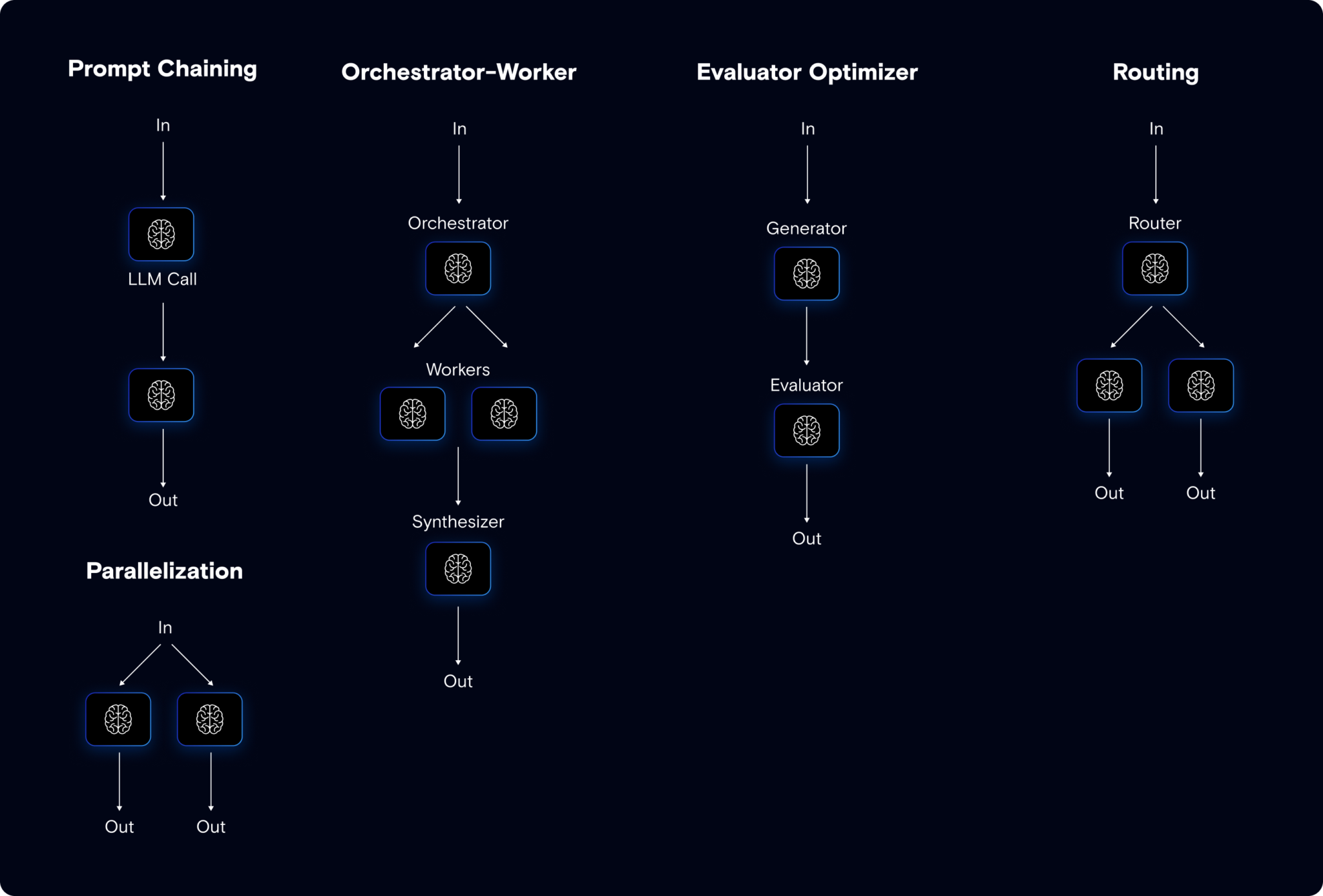

Prompt Chaining: Prompt chaining is an effective method for managing complex tasks by breaking them into sequential steps, with each step working on the output of the previous one. This approach matters because large tasks can overwhelm AI models, leading to poor outcomes. For example, instead of asking an AI to build an entire e-commerce site, the task can be split into smaller parts, such as developing the backend or setting up microservices on AWS. This method allows for detailed, focused processing of each component, improving the AI’s performance and results. Anthropic recommends prompt chaining as a best practice for complex AI-driven projects.
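A chain like this can be sketched as a list of steps where each step consumes the previous step's output. The `stub_llm` function and the step names below are hypothetical; a real chain would send each instruction to a model and could use a stricter check between steps.

```python
from typing import Callable

Step = Callable[[str], str]

def stub_llm(instruction: str, text: str) -> str:
    """Hypothetical model call; wraps the input so the chain order is visible."""
    return f"{instruction}({text})"

def make_step(instruction: str) -> Step:
    """Bind one instruction to the (stubbed) model."""
    return lambda text: stub_llm(instruction, text)

def run_chain(task: str, steps: list[Step],
              gate: Callable[[str], bool] = lambda out: bool(out.strip())) -> str:
    """Run steps in order, checking each output before passing it on."""
    result = task
    for number, step in enumerate(steps, start=1):
        result = step(result)
        if not gate(result):
            raise ValueError(f"step {number} produced unusable output")
    return result

# Illustrative split of a large task into smaller, focused prompts.
chain = [make_step("outline"), make_step("design_backend"), make_step("write_deploy_plan")]
```

With the stub, `run_chain("e-commerce site", chain)` returns a nested string that shows the order in which the steps ran. The `gate` check between steps is where a failed intermediate result can be caught before it contaminates later steps.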

Routing: Routing is a compelling workflow pattern that plays a critical role in directing tasks efficiently within AI systems. In this setup, an LLM router acts as the decision-maker that determines which specific LLM should handle an incoming request.

This process involves several specialized LLMs, each configured to perform a distinct task. Each has its own system prompt and may have access to specialized tools or even a different model configuration. For instance, one LLM might be tuned for one type of request, such as billing questions, while others are optimized for different areas, such as technical support.
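The routing step can be sketched as a classifier followed by a dispatch table. In this illustration the router is a keyword matcher standing in for an LLM classifier, and the categories, system prompts, and function names are all hypothetical.

```python
# One system prompt per specialist; the categories are invented for illustration.
HANDLERS = {
    "billing": "You are a billing specialist.",
    "technical": "You are a support engineer.",
    "general": "You are a helpful assistant.",
}

def stub_router(request: str) -> str:
    """Stand-in for the router LLM: returns the label of the best specialist."""
    text = request.lower()
    if any(word in text for word in ("invoice", "refund", "charge")):
        return "billing"
    if any(word in text for word in ("error", "crash", "bug")):
        return "technical"
    return "general"

def stub_specialist(system_prompt: str, request: str) -> str:
    """Stand-in for a specialist LLM call configured with its own system prompt."""
    return f"[{system_prompt}] handling: {request}"

def route(request: str) -> tuple[str, str]:
    """Classify the request, then forward it to the matching specialist."""
    label = stub_router(request)
    system_prompt = HANDLERS.get(label, HANDLERS["general"])
    return label, stub_specialist(system_prompt, request)
```

Falling back to the `general` handler when the router returns an unknown label keeps the system responsive even when classification fails.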

Parallelization: Parallelization is a key workflow pattern where a complex task is divided into smaller, more manageable segments and distributed across multiple LLMs. This division allows each segment of the task to be processed simultaneously, significantly enhancing the efficiency of operations. Unlike routing, where tasks are sent to different LLMs based on their specialization, parallelization focuses on executing multiple tasks concurrently to speed up the overall process. An aggregator function then collects and combines results from all segments, ensuring a cohesive output. This method is particularly valuable in scenarios where speed is crucial and tasks can be naturally segmented to operate in parallel, thus shortening response times and improving performance.
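A minimal sketch of this fan-out and aggregate shape, using Python's standard `concurrent.futures` module, might look like the following. The `stub_llm_summarize` function is a hypothetical placeholder for a model call; threads suit this case because real LLM calls spend most of their time waiting on the network.

```python
from concurrent.futures import ThreadPoolExecutor

def stub_llm_summarize(section: str) -> str:
    """Hypothetical model call; keeps only the first sentence of the section."""
    return section.split(".")[0].strip()

def aggregate(parts: list[str]) -> str:
    """Aggregator: combine the per-segment results into one output."""
    return " | ".join(parts)

def run_parallel(sections: list[str], max_workers: int = 4) -> str:
    """Process every segment concurrently, then aggregate in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as the inputs.
        results = list(pool.map(stub_llm_summarize, sections))
    return aggregate(results)
```

Because `pool.map` preserves input order, the aggregator can combine the results without tracking which worker finished first.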

Orchestrator-Workers: The orchestrator-workers model enhances task management within AI systems. This pattern involves an orchestrator or supervisor that breaks down complex tasks into manageable units and assigns them to specialized worker agents. For example, in financial research, the orchestrator delegates specific economic data tasks, like GDP analysis or gas price monitoring, to agents skilled in those areas.

Each agent activates only when needed, which improves efficiency by keeping every agent focused on its own area of expertise. Once the agents complete their tasks, a synthesizer node consolidates the results into a cohesive final report. This system is particularly effective in scenarios requiring diverse and specialized knowledge, ensuring precise and efficient task execution.
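The financial research example can be sketched as follows. The orchestrator here is a keyword-based stub standing in for an LLM planner, the workers return placeholder text rather than real economic data, and all names are hypothetical.

```python
from typing import Callable

# Specialized workers; each returns a placeholder instead of real analysis.
WORKERS: dict[str, Callable[[str], str]] = {
    "gdp": lambda question: "GDP analysis: (stub result)",
    "gas_prices": lambda question: "Gas price monitoring: (stub result)",
    "employment": lambda question: "Employment analysis: (stub result)",
}

def stub_orchestrator(question: str) -> list[str]:
    """Stand-in for the orchestrator LLM: decide which workers are needed."""
    text = question.lower()
    plan = []
    if "gdp" in text:
        plan.append("gdp")
    if "gas" in text:
        plan.append("gas_prices")
    if "employment" in text or "jobs" in text:
        plan.append("employment")
    return plan

def synthesize(question: str, findings: list[str]) -> str:
    """Synthesizer node: merge the worker outputs into one report."""
    lines = [f"Report for: {question}"] + [f"- {finding}" for finding in findings]
    return "\n".join(lines)

def research(question: str) -> tuple[list[str], str]:
    """Plan, run only the selected workers, then synthesize the results."""
    plan = stub_orchestrator(question)
    findings = [WORKERS[name](question) for name in plan]
    return plan, synthesize(question, findings)
```

For a question that mentions only GDP and gas prices, the employment worker is never called, which mirrors the point that each agent activates only when needed.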

Evaluator-Optimizer: The evaluator-optimizer pattern involves a feedback loop where one AI model generates an output and another evaluates it and suggests refinements. For instance, in software development, a coder agent writes code that a reviewer agent evaluates for quality. The reviewer provides feedback, which the coder uses to improve the code. This cycle repeats until the code meets quality standards, so each round moves the output closer to the target.
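The coder and reviewer loop can be sketched like this. Both roles are deterministic stubs standing in for LLM calls, the single review rule (require a docstring) is invented for illustration, and the `max_rounds` cap is an added safeguard so the loop cannot run forever.

```python
def stub_coder(task: str, feedback: list[str]) -> str:
    """Stand-in for the generator LLM: revises its draft based on feedback."""
    if "add a docstring" in feedback:
        return 'def add(a, b):\n    """Return the sum of a and b."""\n    return a + b\n'
    return "def add(a, b):\n    return a + b\n"

def stub_reviewer(code: str) -> list[str]:
    """Stand-in for the evaluator LLM: returns a list of issues, empty if none."""
    issues = []
    if '"""' not in code:
        issues.append("add a docstring")
    return issues

def refine(task: str, max_rounds: int = 3) -> tuple[str, int]:
    """Alternate generation and review until the reviewer has no feedback."""
    code = ""
    feedback: list[str] = []
    for round_number in range(1, max_rounds + 1):
        code = stub_coder(task, feedback)
        feedback = stub_reviewer(code)
        if not feedback:
            return code, round_number
    return code, max_rounds  # best effort once the round budget is spent
```

With these stubs the first draft is rejected for lacking a docstring and the second draft passes, so `refine` returns after two rounds.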

Autonomous Agents: Autonomous agents independently handle complex, open-ended problems within AI systems, similar to the functionality seen in Microsoft’s AutoGen framework. These agents act on real-time data and feedback, adapting their responses as needed without human oversight. For example, an autonomous agent tasked with creating a sign-up form for an online banking system might generate code, test it, and revise it based on the outcomes of those tests, continuing this cycle until the code meets the specified standards. This self-guided process allows for high adaptability but can also lead to unpredictable performance and costs, so it requires careful monitoring and management.
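The generate, test, and revise cycle can be sketched with feedback coming from the environment (a small test suite) rather than from a second model. Everything here is a simplified assumption: the drafts are hard-coded functions standing in for model output, the email check is a toy validator for a sign-up form, and `max_steps` is one simple way to bound cost.

```python
from typing import Callable, Optional

Validator = Callable[[str], bool]

# Environment feedback: inputs paired with the expected validation result.
TEST_CASES = [("user@example.com", True), ("no-at-sign", False), ("", False)]

def draft_1(email: str) -> bool:
    return True  # naive first attempt: accepts everything

def draft_2(email: str) -> bool:
    return "@" in email  # revised attempt after seeing failures

DRAFTS: list[Validator] = [draft_1, draft_2]

def stub_generate(attempt: int, failures: list[str]) -> Validator:
    """Stand-in for the model: returns its next draft given the last failures."""
    return DRAFTS[min(attempt, len(DRAFTS) - 1)]

def run_tests(candidate: Validator) -> list[str]:
    """Return the inputs on which the candidate gives the wrong answer."""
    return [value for value, expected in TEST_CASES if candidate(value) != expected]

def autonomous_loop(max_steps: int = 5) -> tuple[Optional[Validator], int]:
    """Generate, test, and revise until the tests pass or the budget runs out."""
    failures: list[str] = []
    for attempt in range(max_steps):
        candidate = stub_generate(attempt, failures)
        failures = run_tests(candidate)
        if not failures:
            return candidate, attempt + 1
    return None, max_steps
```

The step budget is the important design choice: without it, an agent that never satisfies its tests would keep generating drafts and accumulating cost.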

Choosing an AI Agent Framework

Selecting an AI agent framework requires careful consideration of various factors to ensure it aligns perfectly with your project’s needs and goals. Here’s how to evaluate potential frameworks based on critical areas:

Use Cases

Understand the specific scenarios and challenges your project will address. Each framework has strengths in different areas such as transaction processing, natural language understanding, or real-time analytics. Identify frameworks that excel in the use cases most relevant to your project, ensuring they can effectively handle the tasks you will assign to your AI agents.

Criticality of the Project

Assess the importance of the AI system in your project’s overall success. If the AI component is critical, opt for a framework known for reliability and robust performance under pressure. This consideration will help you prioritize features like system stability and support in case of downtimes or anomalies.

Team Skills

Consider the technical capability of your team. If your team is not proficient in a complex framework, you might need a solution that is more user-friendly or well-documented. The chosen framework should align with your team’s skills to minimize the learning curve and facilitate a smoother development process.

Time and Budget Constraints

Evaluate the time you have to implement the AI solution and the budget available. Some frameworks may offer rapid deployment options but at a higher cost. Conversely, more cost-effective solutions might require a longer setup time. Balance these factors based on your project’s schedule and financial resources.

Integration with Existing Systems

The framework should integrate seamlessly with your existing IT infrastructure. Check for compatibility with your current systems, databases, and application programming interfaces (APIs). A framework that is difficult to integrate can lead to increased costs and extended timelines.

AI Ops

Consider how the framework supports AI operations management, including deployment, monitoring, scaling, and updates. Good AI Ops practices ensure that your AI systems run efficiently and continue to improve over time without requiring constant manual oversight. Look for frameworks that offer tools for automating these operations, provide good monitoring capabilities, and support scalable deployments.
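One way to make this evaluation concrete is a weighted decision matrix over the six areas above. The sketch below is only an illustration: the weights, the 1 to 5 scores, and the names `framework_a` and `framework_b` are placeholders to be replaced with your own assessment, not ratings of any real framework.

```python
# Relative importance of each criterion (placeholder values).
WEIGHTS = {
    "use_cases": 3,
    "criticality": 2,
    "team_skills": 2,
    "time_budget": 1,
    "integration": 2,
    "ai_ops": 1,
}

# Placeholder scores from 1 (poor fit) to 5 (strong fit).
CANDIDATES = {
    "framework_a": {"use_cases": 4, "criticality": 3, "team_skills": 5,
                    "time_budget": 4, "integration": 3, "ai_ops": 2},
    "framework_b": {"use_cases": 5, "criticality": 4, "team_skills": 2,
                    "time_budget": 2, "integration": 4, "ai_ops": 4},
}

def weighted_score(scores: dict[str, int], weights: dict[str, int]) -> float:
    """Weighted average of the criterion scores."""
    total_weight = sum(weights.values())
    return sum(weights[name] * scores[name] for name in weights) / total_weight

def rank(candidates: dict[str, dict[str, int]], weights: dict[str, int]) -> list[str]:
    """Candidate names ordered from best to worst weighted score."""
    return sorted(candidates,
                  key=lambda name: weighted_score(candidates[name], weights),
                  reverse=True)
```

Changing the weights is often more revealing than the final ranking, because it shows which criteria actually drive the decision for your project.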

Conclusion

The evolution of AI agents in 2025 promises transformative impacts across sectors, automating routine tasks and changing how people interact with intelligent systems. This exploration of the capabilities, frameworks, and operational patterns of AI agents offers a roadmap for businesses aiming to integrate advanced AI solutions. By adopting these technologies, organizations can enhance efficiency, improve decision-making, and secure a competitive edge. Visit Atompoint to explore how AI can enhance your business workflows.